- January 7, 2020

- wladone3

- Natural Language Processing

If you have a business with a heavy customer service demand, and you want to make your process more efficient, it’s time to think about introducing chatbots. In this blog post, we’ll cover some standard methods for implementing chatbots that can be used by any B2C business.

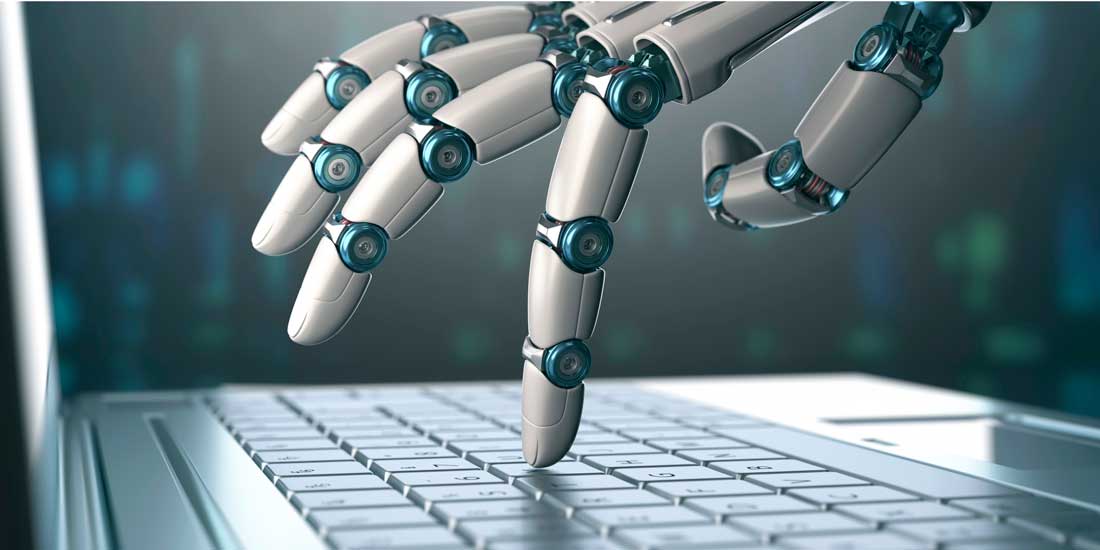

1. Chatbots


The most important use cases of Natural Language Processing are:

Document summarization is a set of methods for creating short, meaningful descriptions of long texts (e.g. documents or research papers).

2. Deep learning

At this point, your data is prepared and you have chosen the right kind of chatbot for your needs. You have a sufficient corpus of text for your machine to learn from, and you are ready to begin teaching your bot. In the case of a retrieval-based bot, the teaching process consists of taking a context as input (a conversation with a client, including all prior sentences) and outputting a potential answer based on what it read. Google Assistant uses a retrieval-based model (Smart Reply – Google), which can help give you an idea of what this looks like.
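To make the retrieval idea concrete, here is a minimal sketch in Python. It is not how Smart Reply or any production bot works: it assumes a tiny hand-written corpus of (past context, answer) pairs and uses plain bag-of-words cosine similarity in place of a learned model. The names `retrieve_answer` and `CORPUS` are made up for this illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_answer(context, corpus):
    """Return the stored answer whose past context best matches the new one.

    context: list of prior sentences in the current conversation.
    corpus:  list of (past_context, answer) pairs (hypothetical toy data).
    """
    query = Counter(tokenize(" ".join(context)))
    best_answer, best_score = None, -1.0
    for past_context, answer in corpus:
        score = cosine_similarity(query, Counter(tokenize(past_context)))
        if score > best_score:
            best_answer, best_score = answer, score
    return best_answer, best_score


# Hypothetical toy corpus; a real bot would learn from many logged conversations.
CORPUS = [
    ("where is my order it has not arrived",
     "I can check the delivery status for you. What is your order number?"),
    ("i want to return a product and get a refund",
     "You can start a return from your account page under Orders."),
    ("how do i reset my password",
     "Use the 'Forgot password' link on the login page to reset it."),
]

answer, score = retrieve_answer(
    ["Hello", "I forgot my password, how do I reset it?"], CORPUS
)
print(answer)
```

A trained retrieval model would replace the word-count similarity with a learned scoring function, but the shape stays the same: context in, best-scoring stored answer out.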

3. Conclusion

All the data used to build or test an ML model is called a dataset. Data scientists typically divide a dataset into three separate groups:

- Training data is used to train a model. This means the ML model sees the data and learns to detect patterns or to determine which features matter most for prediction.

- Validation data is used for tuning model parameters and comparing different models in order to determine the best ones. The validation data should be different from the training data and should not be used in the training phase. Otherwise, the model would overfit and generalize poorly to new (production) data.

- Test data (often called a hold-out set) is the third and final group. It may seem tedious to keep one, but it is used once the final model has been chosen, to simulate the model’s behaviour on completely unseen data, i.e. data points that weren’t used in building models or even in deciding which model to choose.
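The three groups above can be sketched with a simple shuffled split. This is an illustration using only the Python standard library; the function name `split_dataset`, the 70/15/15 proportions, and the fixed seed are assumptions chosen for the example, not values from this post.

```python
import random


def split_dataset(examples, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle the examples and split them into train, validation and test lists.

    The fractions and the seed are illustrative defaults, not recommendations.
    """
    if val_fraction < 0 or test_fraction < 0 or val_fraction + test_fraction >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")

    shuffled = list(examples)              # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle for a reproducible split

    n_test = round(len(shuffled) * test_fraction)
    n_val = round(len(shuffled) * val_fraction)

    test = shuffled[:n_test]                # held out until the final model is chosen
    val = shuffled[n_test:n_test + n_val]   # used for tuning and model comparison
    train = shuffled[n_test + n_val:]       # the only part the model learns from
    return train, val, test


data = list(range(100))  # stand-in for real labelled examples
train, val, test = split_dataset(data)
print(len(train), len(val), len(test))
```

Because every example lands in exactly one of the three lists, nothing the model is evaluated on has been seen during training or tuning.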

This overview is by no means exhaustive, but it makes a good, light read before a meeting with an AI director or vendor, or a quick refresher before a job interview!

Aron Larsson

– CEO, Strategy Director

